Chatbot cheating threat is real, but manageable

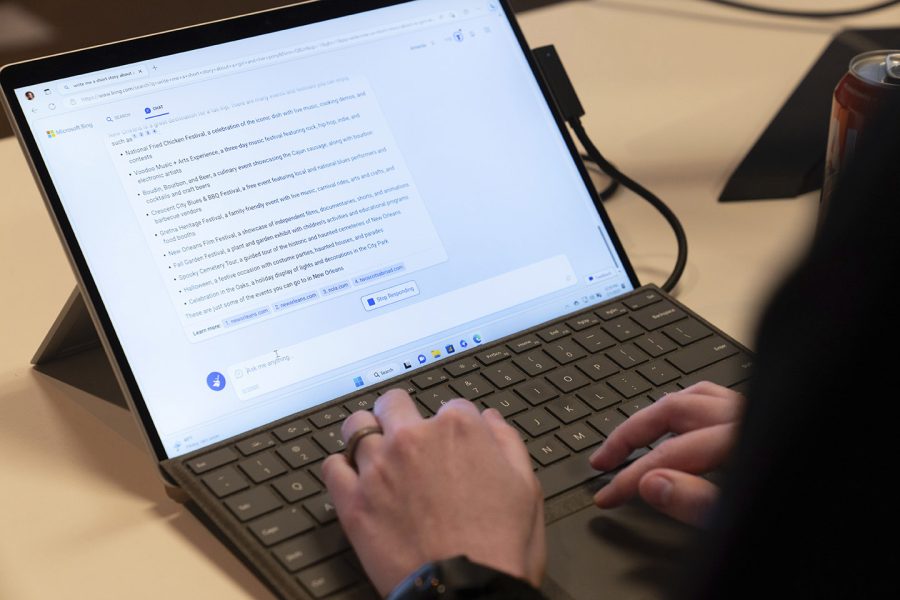

Microsoft employee Alex Buscher demonstrates a search feature that integrates Microsoft’s Bing search engine and Edge browser with OpenAI technology. Microsoft has invested billions in artificial intelligence chatbots in recent months.

February 16, 2023

University professors and administrators are scrambling to address the new challenges that artificial intelligence will pose as the technology rapidly develops and intersects with academia.

The software leading the charge in the consumer marketplace is an artificial intelligence chatbot called “ChatGPT” that has the power to draft complete original essays, solve complex math problems, and answer exam questions immediately. Chatbots are computer programs designed to simulate human conversations in a digital environment, and ChatGPT can generate human-like text for a multitude of applications.

ChatGPT poses a new challenge to professors, who must now consider how it will affect academic assessment tools like essays and multiple-choice exams. Father Nathan O’Halloran, S.J., director of the Canizaro Center for Catholic Studies and assistant professor of religious studies, said that he does not believe the essay is obsolete; however, he has begun pivoting to new testing strategies.

O’Halloran said that he has returned to using oral exams as one antidote to cheating. He noted that the trouble with ChatGPT is that even if a student evidently used the software, it’s hard to prove. In the past, professors were able to discover cheating through plagiarism detectors like “Turnitin” that are installed on university networks, but ChatGPT can circumvent detection by creating original text.

Roger White, the chair of the political science department, has been studying the development of AI closely for several years. White agrees that the value of essays will not be negated by the evolution of AI technology. He said he’s keeping an open mind about where it may take us in the near future, in part because he believes the essay will remain a powerful conduit for sharing ideas.

“I have students who don’t speak in class. They just don’t speak. And I have some that are going to be presented in a symposium. They have a lot to say. So the essay becomes a framework for minds to communicate with each other,” White said.

University administrators across the country fear that the use of ChatGPT may encourage students to avoid critical thinking and learning altogether.

“Thinking is arriving at judgments and then generating more questions and then arriving at new judgments and then generating more questions. And it’s that process that is thinking,” O’Halloran said. “When it comes to living in the world, I have to make judgments constantly, and I will not know the difference between a good judgment and bad judgment or an authentic judgment or a prudent judgment or virtuous judgment if I’ve just abdicated the judgment making process all through college. The judgment-making process is the purpose of education.”

Using AI to complete coursework is incongruous with Loyola’s mission to pursue truth and wisdom, according to O’Halloran. He said he feels a personal responsibility to educate his students and hold true to the “Jesuit mission of educating the whole person.”

While AI might be able to offer guidance on appropriate judgments to make on subject matters or how to navigate certain situations, O’Halloran said there’s no guarantee it would be a virtuous decision because it’s an aggregated decision made on the basis of a majority. But the majority does not necessarily make the best decisions.

According to a University Faculty Senate report from last month, the senate discussed how Loyola should respond to the development of ChatGPT and what stance the community should take on AI. While neither O’Halloran nor White has heard anything from the administration yet, Patricia Murret, the associate director of public affairs, said the university is taking active steps to address the misuse of AI software.

Murret said Loyola held a workshop earlier this month to show professors what ChatGPT looks like and several tools that can be used to identify AI-generated work. She also said the university is working with Turnitin to install detection systems in campus software.

Murret said that using AI to complete classwork is a violation of the academic honor code, which prohibits students from “engaging in plagiarism, and the use of ChatGPT would be considered as such because the work of others is being used as one’s own.”

White argued that, ChatGPT or not, students are given a great deal of trust, and they are expected to reciprocate with honesty.

“Most successful interactions between people are based around trust. Stop and think about it. Would you do business with people you didn’t trust?” White said. “To the extent that people are dishonest, the person they’re harming over the long term is themselves.”

Both O’Halloran and White expressed concern about the misuse of AI. O’Halloran said, “it will raise all kinds of moral questions and moral dilemmas about how it’s programmed to act and override human reasoning and things like that.”

“Consciousness is required for empathy, and you can’t actually empathize with somebody if you’re not conscious. So, if you can crank procedures and operations unconsciously without empathy, and that becomes faster and stronger than us, that could be a problem,” White said.

White also urged students to pay attention to how AI merges with other technologies in the near future, like brain-computer interfaces. Brain-computer interfaces are systems that can capture brain activity and translate it into commands for devices such as prosthetic limbs, computer cursors, and other robotic equipment. But the capabilities of brain-computer interfaces are still mostly unknown.

White said that with a full brain-computer interface, we would still be human beings and would still maintain our human thoughts and feelings, but we would be able to access the web directly using our brains.

“Imagine what that could do for midterms and finals,” White said.

White and other professors across the country are optimistic about AI’s potential as a tool for educators in the future. Not all signs of rapid AI development are negative, but as the technology advances, universities across the country will have to create new monitoring systems to patch holes left by this largely unexplored technology. And Microsoft’s recent $10 billion investment in OpenAI, the company behind ChatGPT, indicates a potential internet renaissance on the horizon.

“There’s no other time I’d rather live than right now,” White said.